In-Memory Computing

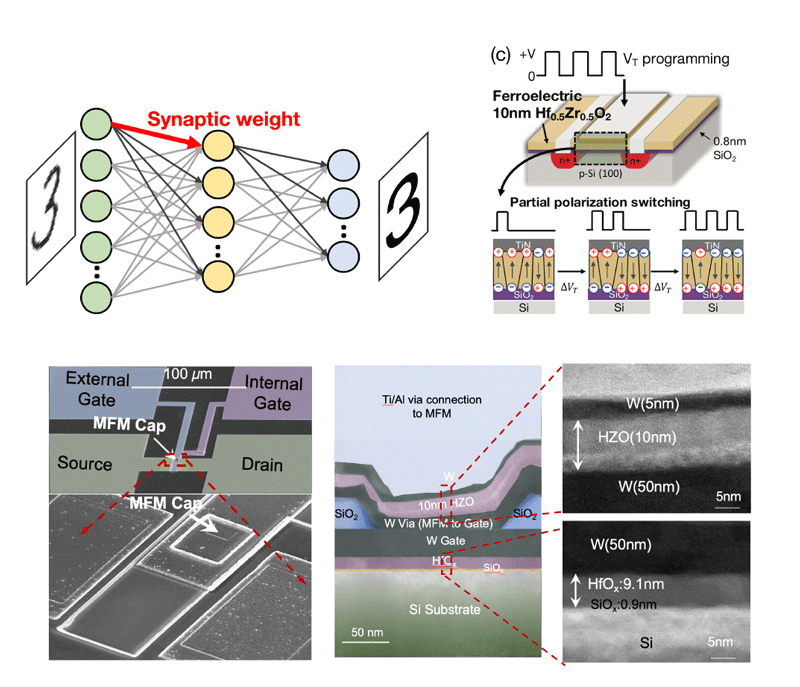

Analog Synapse

The memory requirements of at-scale deep neural networks (DNNs) dictate that synaptic weight values be stored and updated in off-chip memory such as DRAM, which limits energy efficiency and lengthens training time. Analog non-volatile memories capable of storing and updating weights on-chip offer the possibility of accelerating DNN training. We harness the polarization switching dynamics of ferroelectric FETs (FeFETs) fabricated at Notre Dame to demonstrate such an analog synapse. We experimentally demonstrated a FeFET-based analog synapse under various pulsing schemes, such as amplitude modulation and pulse modulation, as well as a CMOS-assisted hybrid precision scheme. We also developed a transient compact circuit model to describe FeFET dynamics. Recently, our lab also fabricated a novel ferroelectric-metal field-effect transistor (FeMFET) analog weight cell by integrating a ferroelectric (FE) capacitor on top of the gate of a conventional silicon high-K metal gate MOSFET.
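As a rough illustration of what pulse-driven analog weight updates look like, the sketch below uses a generic soft-bound model: each identical pulse moves a normalized conductance a fixed fraction of the way toward its upper or lower limit. This is a textbook-style toy, not the compact model or the measured FeFET behavior described above. The function name `apply_pulses` and the parameters `alpha`, `g_min`, and `g_max` are assumptions made for this example.

```python
def apply_pulses(g, n_pulses, alpha=0.1, g_min=0.0, g_max=1.0):
    """Toy soft-bound analog weight update (illustrative assumption only).

    g        : current normalized conductance (the stored weight)
    n_pulses : > 0 applies potentiation pulses, < 0 applies depression pulses
    alpha    : hypothetical fraction of the remaining range covered per pulse
    """
    for _ in range(abs(n_pulses)):
        if n_pulses > 0:
            # Potentiation: step size shrinks as g approaches g_max.
            g += alpha * (g_max - g)
        else:
            # Depression: step size shrinks as g approaches g_min.
            g -= alpha * (g - g_min)
    return g


# Conductance after 0..5 identical potentiation pulses, starting from g_min.
trace = [apply_pulses(0.0, n) for n in range(6)]
```

In this toy model the update is nonlinear in the pulse count and saturates at the bounds, which is the kind of non-ideality that pulsing schemes and hybrid precision approaches aim to manage.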

Papers:

1. Jerry, Matthew, et al. "Ferroelectric FET analog synapse for acceleration of deep neural network training." 2017 IEEE International Electron Devices Meeting (IEDM). IEEE, 2017.

2. Sun, Xiaoyu, et al. "Exploiting Hybrid Precision for Training and Inference: A 2T-1FeFET Based Analog Synaptic Weight Cell." 2018 IEEE International Electron Devices Meeting (IEDM). IEEE, 2018.

3. Ni, Kai, et al. "SoC logic compatible multi-bit FeMFET weight cell for neuromorphic applications." 2018 IEEE International Electron Devices Meeting (IEDM). IEEE, 2018.

4. Ni, Kai, et al. "Fundamental Understanding and Control of Device-to-Device Variation in Deeply Scaled Ferroelectric FETs." 2019 Symposium on VLSI Technology. IEEE, 2019.

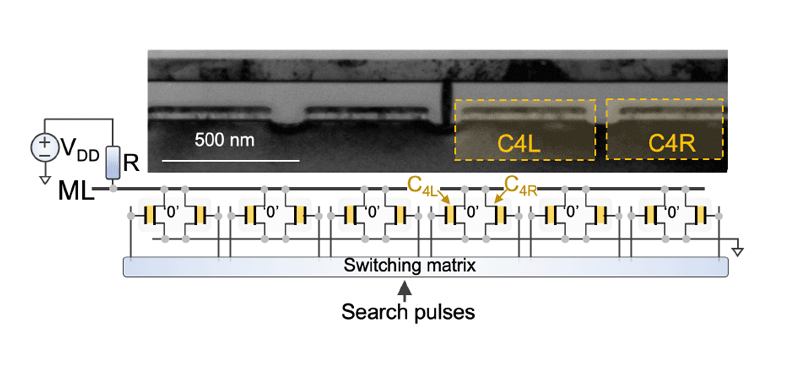

One-Shot Learning

Deep neural networks are efficient at learning from large sets of labelled data; however, they struggle to adapt to previously unseen data. We adopt a novel approach towards generalized artificial intelligence by augmenting neural networks with an attentional memory so that they can draw upon already learnt knowledge patterns and adapt to new but similar tasks. We build FeFET-based ternary content-addressable memory (TCAM) cells that act as attentional memories, in which the distance between a query vector and each stored entry is computed within the memory itself, thus avoiding the expensive data transfer of a von Neumann architecture.
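The search that such a memory performs can be sketched in software as follows. This is a minimal functional model, assuming binary query strings, stored entries over the alphabet `0`, `1`, and `X` (don't care), and a Hamming-style mismatch count as the distance. The helper names `tcam_distance` and `nearest_entry` are hypothetical, and the sketch says nothing about how the FeFET cells compute the distance electrically.

```python
def tcam_distance(query, entry):
    """Count mismatching bits, skipping 'X' (don't care) positions in the entry."""
    if len(query) != len(entry):
        raise ValueError("query and entry must have the same length")
    return sum(1 for q, e in zip(query, entry) if e != "X" and q != e)


def nearest_entry(query, entries):
    """Return (index of the closest stored entry, list of all distances).

    A hardware TCAM evaluates every row in parallel; this loop only
    models the result of that search, not its cost.
    """
    distances = [tcam_distance(query, e) for e in entries]
    best = min(range(len(entries)), key=distances.__getitem__)
    return best, distances


stored = ["1010", "1X00", "0111"]
best, distances = nearest_entry("1000", stored)
# best == 1, distances == [1, 0, 4]
```

In a one-shot learning setting, the stored entries would stand for encoded examples of previously seen classes, and the closest match would supply the label for a new query.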

Funded by:

NSF, DARPA, SRC JUMP